Benchmark Company, performance test ▷ Load tests, software performance and web applications

The performance test for geeks, and for everyone else too

Stay up to date with recent happenings at Benchmark.

The Benchmark Company

We are an institutionally focused research, sales and trading, and investment banking firm working to set the benchmark in promoting each customer’s success.

History

Founded in 1988 and based in New York City with operations around the country, we cover institutional and corporate customers with our research, sales and trading, and investment banking capabilities. We have built a reputation for delivering superior service, market access, and in-depth market and industry expertise.

Commitment

At Benchmark, we are committed to your success. Our team of experienced professionals works closely with you to understand your unique needs and goals, offering sound, unbiased guidance by drawing on resources from across our services platform.

Our customers

For over 30 years, we have worked with a broad mix of companies, financial sponsors and institutional investors across the globe who have come to rely on our focused, individualized attention and trusted advice to deliver actionable ideas and seamless execution.

Our team

Our team of experienced professionals works closely with you to understand your unique needs and goals, offering sound, unbiased guidance by drawing on resources from across our services platform. This collaborative “Benchmark team” approach is focused solely on partnering with you to create significant value and build a long-term relationship.

Company News


The performance test for geeks, and for everyone else too!

Do you develop, or want to develop, software or web applications? Are you an IT engineer, or part of an IT department (DSI), working on the overhaul of a tool already in place in your company? Do you provide outsourced IT services for a very small enterprise (VSE) or an SME?

In a highly competitive market, where you need to be fast, or even first, with efficient software or web applications and a responsive website, performance testing is essential.

The good news? There are solutions to automate this testing phase and support you all the way to production.

Appvizer introduces the steps and challenges of performance testing, as well as the existing tools.

Performance test: a brief overview

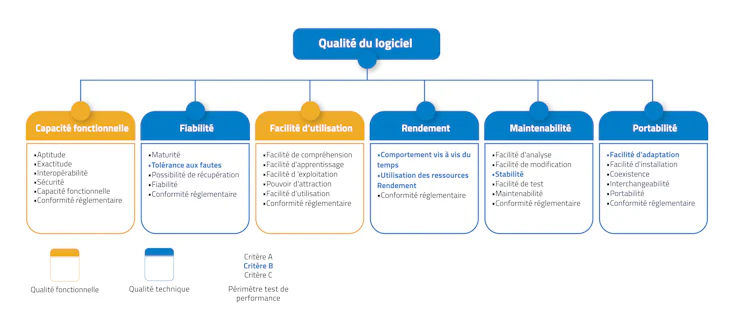

Below, we identify the main performance tests, covering both functional and technical criteria, which help detect a system's problematic behavior (bugs) so that it can be corrected.

Since these tests often overlap, and time and budget are limited, it is rare, and usually unnecessary, to run all of them.


Performance test: definition and objectives

A performance test checks that a computer system runs properly by measuring its response times.

Its objective is to provide metrics on the speed of the application.

The performance test therefore addresses the need of both users and companies for speed.

In the case of continuous performance testing, testing begins at the start of development and is adapted to each stage of the application life cycle, up to full-scale load tests.
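Because a performance test boils down to measuring response times, a minimal sketch of that measurement may help. It assumes the operation under test can be wrapped in a Python callable; `measure_response_times`, `summarize` and `fake_request` are hypothetical names, and a real campaign would use a dedicated tool rather than this loop.

```python
import statistics
import time
from typing import Callable


def measure_response_times(operation: Callable[[], object], runs: int = 50) -> list[float]:
    """Call `operation` `runs` times and return each response time in milliseconds."""
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        operation()
        timings.append((time.perf_counter() - start) * 1000.0)
    return timings


def summarize(timings_ms: list[float]) -> dict[str, float]:
    """Summarize response times with the mean, the median and the 95th percentile."""
    # quantiles(n=100) returns the 99 cut points p1..p99, so index 94 is p95.
    p95 = statistics.quantiles(timings_ms, n=100, method="inclusive")[94]
    return {
        "mean_ms": statistics.mean(timings_ms),
        "median_ms": statistics.median(timings_ms),
        "p95_ms": p95,
    }


if __name__ == "__main__":
    # Hypothetical operation standing in for a request to the application under test.
    def fake_request() -> None:
        time.sleep(0.002)

    print(summarize(measure_response_times(fake_request, runs=20)))
```

Reporting a high percentile next to the mean is a common choice, because a few very slow responses can hide behind a good average.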


Its objectives are numerous. It makes it possible:

- to know the system's capacity and its limits,

- to detect and monitor its weak points,

- to optimize infrastructure and operating costs,

- to ensure that it works without errors under given load conditions,

- to optimize response times to improve the user experience (UX),

- to check that performance remains stable between the production version and version N+1,

- to reproduce a production problem,

- to anticipate a future increase in load or the addition of a feature,

- to assess whether to install an APM (application performance monitoring) tool,

- to ensure that the system and its external third-party applications behave correctly in the event of a failure followed by a reconnection, etc.
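To illustrate the objective of checking stability between the production version and version N+1, here is a minimal sketch that compares p95 response times page by page. The 10% tolerance, the page paths and the `find_regressions` name are hypothetical choices, not values from the text.

```python
def find_regressions(
    baseline: dict[str, float], candidate: dict[str, float], tolerance: float = 0.10
) -> dict[str, float]:
    """Return pages whose p95 response time grew by more than `tolerance`, with the relative increase."""
    regressions = {}
    for page, base_ms in baseline.items():
        new_ms = candidate.get(page)
        if new_ms is None:
            continue  # page not measured in the N+1 run
        increase = (new_ms - base_ms) / base_ms
        if increase > tolerance:
            regressions[page] = increase
    return regressions


if __name__ == "__main__":
    production = {"/home": 200.0, "/checkout": 400.0}  # hypothetical p95 values in ms
    version_n1 = {"/home": 210.0, "/checkout": 520.0}
    print(find_regressions(production, version_n1))
```

With these sample values, only `/checkout` is flagged (a 30% increase); `/home` grows by 5%, which stays within the assumed tolerance.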

The tests presented below are all performance tests, carried out under specific conditions.

The efficiency test (yield test)

The efficiency test is a more advanced performance test: it checks that a computer system runs properly by measuring its response times according to user demand, in a realistic context. This distinction is made mainly in Quebec.

It establishes a relationship between performance and the resources used (memory, bandwidth).

It addresses users' needs for both speed and quality.

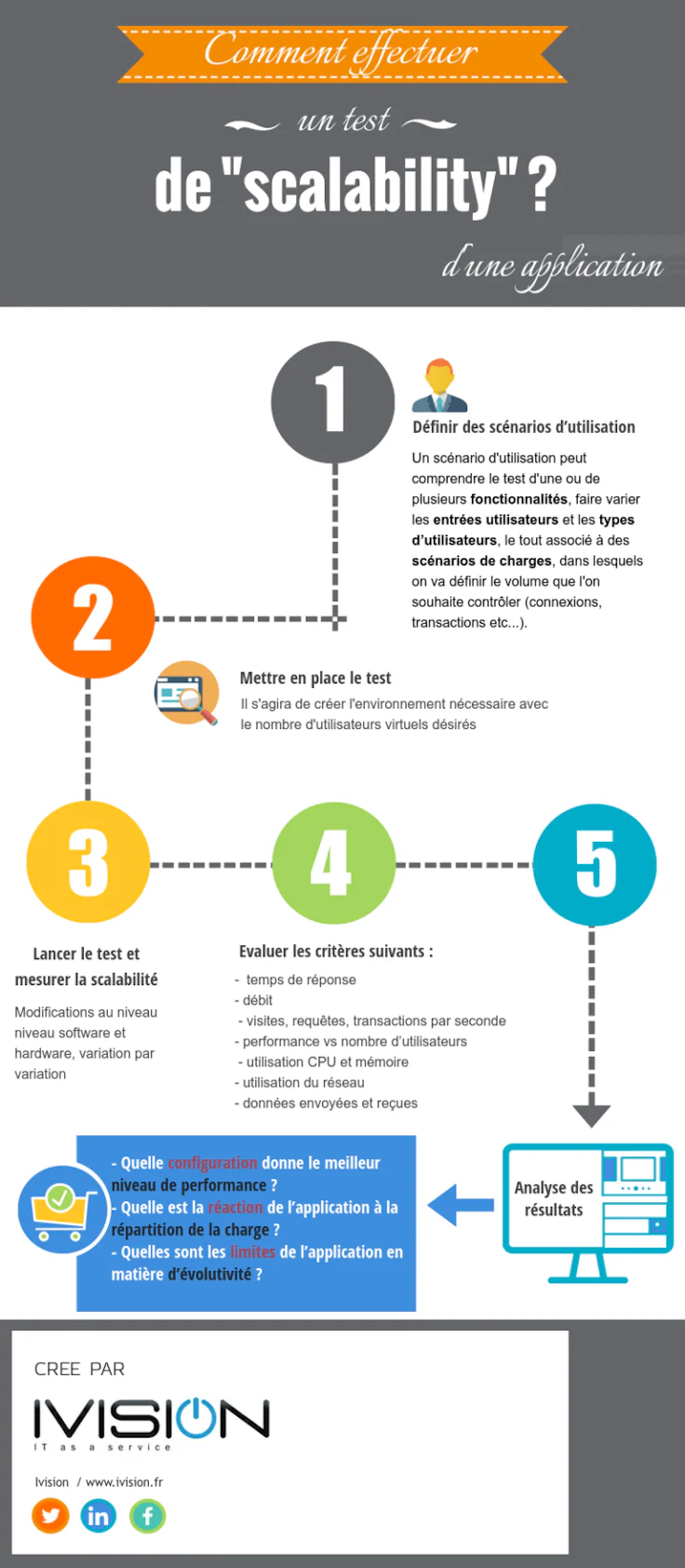

The load test (load testing) and scale-up test (scalability testing)

The load test measures a system's behavior under the expected simultaneous user load, called the target population.

By increasing the number of users in stages, it looks for the system's limits in order to validate the quality of service before deployment.

It answers the question: what is the maximum load the system can support?
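The staged ramp-up can be sketched in a few lines. This is a toy model, not a load injector: it assumes a hypothetical `SimulatedSystem` that serves at most `capacity` requests at once, sends one request per simulated user at each stage, and treats a p95 limit as the quality-of-service criterion.

```python
import statistics
import threading
import time
from concurrent.futures import ThreadPoolExecutor


class SimulatedSystem:
    """Hypothetical system under test: it serves at most `capacity` requests at a time."""

    def __init__(self, capacity: int, service_time_s: float) -> None:
        self._slots = threading.Semaphore(capacity)
        self._service_time_s = service_time_s

    def handle_request(self) -> float:
        """Serve one request and return its response time in milliseconds."""
        start = time.perf_counter()
        with self._slots:  # requests queue here once every slot is busy
            time.sleep(self._service_time_s)
        return (time.perf_counter() - start) * 1000.0


def run_step(system: SimulatedSystem, users: int) -> float:
    """Send one request per simulated user, all at once, and return the p95 response time (ms)."""
    with ThreadPoolExecutor(max_workers=users) as pool:
        timings = list(pool.map(lambda _: system.handle_request(), range(users)))
    if len(timings) == 1:
        return timings[0]
    return statistics.quantiles(timings, n=100, method="inclusive")[94]


def max_supported_load(system: SimulatedSystem, steps: list[int], p95_limit_ms: float) -> int:
    """Raise the number of users stage by stage; return the last stage that met the p95 limit."""
    supported = 0
    for users in steps:
        p95 = run_step(system, users)
        print(f"{users:>4} users -> p95 {p95:.0f} ms")
        if p95 > p95_limit_ms:
            break  # the quality of service is no longer met
        supported = users
    return supported


if __name__ == "__main__":
    system = SimulatedSystem(capacity=10, service_time_s=0.1)
    print("max load:", max_supported_load(system, [5, 10, 20, 40], p95_limit_ms=150.0))
```

With a capacity of 10, stages of 5 and 10 users answer in about 100 ms, while at 20 users half of the requests wait for a free slot, pushing p95 to about 200 ms; the sketch therefore reports 10 users as the maximum supported load.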

The stress or breaking-point test (stress testing)

Going beyond the load test, it simulates the maximum expected activity, with all functional scenarios combined at peak traffic, to see how the system reacts in exceptional circumstances (a surge in visits, a failure, etc.).

The test continues until the error rate and loading times are no longer acceptable.
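The stopping rule of a stress test can be expressed as a check over the results of each load stage. In this sketch, `StepResult`, `breaking_point` and the thresholds (1% errors, 2 s at p95) are hypothetical; each project sets its own limits.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class StepResult:
    users: int
    error_rate: float  # fraction of failed requests, from 0.0 to 1.0
    p95_ms: float      # 95th-percentile loading time in milliseconds


def breaking_point(
    results: list[StepResult], max_error_rate: float = 0.01, max_p95_ms: float = 2000.0
) -> Optional[StepResult]:
    """Return the first stage where errors or loading times become unacceptable, or None."""
    for step in sorted(results, key=lambda r: r.users):
        if step.error_rate > max_error_rate or step.p95_ms > max_p95_ms:
            return step
    return None
```

For example, with stages at 100, 500 and 1,000 users where only the last one shows 5% errors, the function returns the 1,000-user stage as the breaking point.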

This list of tests is not exhaustive; there are also:

- the transaction degradation test,

- the endurance test (robustness, reliability),

- the resilience test,

- the aging test, etc.

The performance test campaign

Before developing software, an application or a website, it is essential to set up a methodology that includes:

- drafting the specifications and defining the objectives,

- forming the web project team,

- planning, in particular of the testing phases,

- the final review, etc.

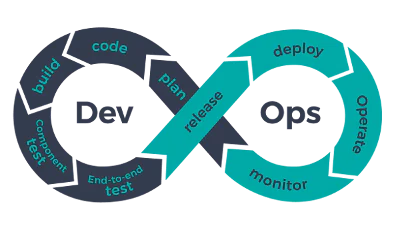

Throughout development, production is supervised; this is also called monitoring.

It consists of monitoring an IT environment continuously and in real time, in order to react quickly to problems encountered in the ecosystem.

It differs from trend monitoring, in which data is historized to give a long-term view of system usage, preferred features, etc.
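The difference between the two kinds of monitoring can be made concrete with a small sketch: a hypothetical `RealTimeMonitor` keeps only a short window of samples and alerts immediately, while a hypothetical `TrendStore` historizes usage per day and feature for later analysis. The window size and threshold are illustrative assumptions.

```python
from collections import defaultdict, deque
from typing import Optional


class RealTimeMonitor:
    """Real-time monitoring: keep only the latest samples and alert immediately."""

    def __init__(self, window: int, threshold_ms: float) -> None:
        self.samples = deque(maxlen=window)  # older samples are dropped automatically
        self.threshold_ms = threshold_ms

    def record(self, response_ms: float) -> bool:
        """Add a response time; return True if the rolling average calls for an alert."""
        self.samples.append(response_ms)
        return sum(self.samples) / len(self.samples) > self.threshold_ms


class TrendStore:
    """Trend monitoring: historize usage per day and feature for long-term analysis."""

    def __init__(self) -> None:
        self.counts = defaultdict(int)  # (day, feature) -> number of uses

    def record(self, day: str, feature: str) -> None:
        self.counts[(day, feature)] += 1

    def most_used(self, day: str) -> Optional[str]:
        """Return the most used feature on a given day, or None if nothing was recorded."""
        per_day = {f: n for (d, f), n in self.counts.items() if d == day}
        return max(per_day, key=per_day.get) if per_day else None
```

The first structure answers "is something wrong right now?", the second "how is the system used over time?", which is why the two are usually kept separate.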

Web project management

Agile methods are increasingly popular in web projects, in particular Scrum, which establishes:

- defined roles,

- an iterative rhythm (repeated and compared tests),

- specific, time-boxed meetings,

- short sprints,

- a test-driven approach, which consists of establishing test rules before writing the code,

- progress tracking with a burndown chart, etc.

The web project manager helps the team define objectives and carry out the test campaign.

They set up tuning (configuration adjustments) to improve application behavior, analyze possible causes of slowdowns with the developers, and validate the monitoring with the production team.

It is important that the project team (web developers, web designers, traffic managers, product managers, project managers) include members of both the build team (development) and the run team (operations), so as to have an end-to-end view of the project.

These different actors adapt the tests for production according to their experience and the context.

Other good practices are recommended for web project management, in particular:

- carrying out a proof of concept (POC), to make sure the overall process is understood and to define everyone’s roles;

- planning trial periods during which the run teams take over after go-live, with technical support from the build teams.

A few key steps of a test campaign

Before you start, it is important to automate the test chain upstream, including data collection and report generation, with the right tool.

It is also essential to properly define and calibrate the scenarios so that they are representative of the expected use over a given period.
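One common way to calibrate scenarios, not prescribed by the text, is the response time law derived from Little's Law: the number of concurrent users N equals the throughput X multiplied by the response time R plus the think time Z, that is N = X × (R + Z). The sketch below applies it per scenario; the traffic mix, times and function names are hypothetical.

```python
import math


def virtual_users(throughput_per_s: float, response_time_s: float, think_time_s: float) -> int:
    """Concurrent users needed to sustain a throughput: N = X * (R + Z), rounded up."""
    # Rounding to 9 decimals first avoids ceil() turning 350.0000000001 into 351.
    return math.ceil(round(throughput_per_s * (response_time_s + think_time_s), 9))


def calibrate(
    peak_throughput_per_s: float, mix: dict[str, float], response_time_s: float, think_time_s: float
) -> dict[str, int]:
    """Split the expected peak throughput across scenarios by their share and size each one."""
    return {
        name: virtual_users(peak_throughput_per_s * share, response_time_s, think_time_s)
        for name, share in mix.items()
    }


if __name__ == "__main__":
    # Hypothetical peak of 50 transactions/s: 70% browsing, 30% checkout.
    print(calibrate(50.0, {"browse": 0.7, "checkout": 0.3}, response_time_s=0.5, think_time_s=9.5))
```

With these assumptions, each user completes one transaction every 10 seconds, so 35 browse transactions per second need 350 virtual users and 15 checkout transactions per second need 150.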

Step 1 – Identification of the test environment and scope:

- the components tested (front end, back end, storage),

- the pages tested,

- the SOA architecture (dependencies between subsystems),

- architectural constraints (network equipment, distributed cache, etc.);

Step 2 – Determination of acceptance criteria (requirements):

- access concurrency and throughput (the number of simultaneous users),

- response time,

- the display time,

- resources used;
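The acceptance criteria listed above can be written as explicit thresholds and checked automatically after each run. In this sketch, `AcceptanceCriteria`, `failed_criteria`, the measurement keys and CPU as the resource indicator are all hypothetical; the real values come from the project's requirements.

```python
from dataclasses import dataclass


@dataclass
class AcceptanceCriteria:
    """Hypothetical thresholds; the real values come from the project's requirements."""
    min_concurrent_users: int
    max_response_ms: float
    max_display_ms: float
    max_cpu_percent: float


def failed_criteria(measured: dict[str, float], criteria: AcceptanceCriteria) -> list[str]:
    """Return the names of the criteria a test run fails; an empty list means it passes."""
    checks = {
        "concurrency": measured["concurrent_users"] >= criteria.min_concurrent_users,
        "response time": measured["response_ms"] <= criteria.max_response_ms,
        "display time": measured["display_ms"] <= criteria.max_display_ms,
        "resources": measured["cpu_percent"] <= criteria.max_cpu_percent,
    }
    return [name for name, ok in checks.items() if not ok]
```

Returning the list of failures, rather than a single boolean, makes it easier to see which requirement a run missed.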

Step 3 – Design of scenarios, which should be:

- run once there is enough data to evaluate,

- documented click by click so they can be reproduced identically,

- simplified at first (warm-up test) to validate the consistency of the infrastructure,

- broken down by user type and feature, etc.;
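A scenario documented click by click can be stored as data and replayed identically, starting with a simple warm-up. In this sketch, `Scenario`, `run_campaign`, the user types and the URLs are hypothetical placeholders, and `send` stands for whatever actually issues the request.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Scenario:
    """A scenario documented step by step (one entry per click or request)."""
    name: str
    user_type: str
    steps: tuple[str, ...]


# Hypothetical scenarios; the URLs are placeholders.
WARM_UP = Scenario("warm-up", "any", ("GET /",))
SCENARIOS = (
    Scenario("browse", "visitor", ("GET /", "GET /catalog", "GET /product/42")),
    Scenario("purchase", "customer", ("GET /", "POST /login", "POST /cart", "POST /checkout")),
)


def run_campaign(send: Callable[[str], None], user_type: str) -> list[str]:
    """Run the simple warm-up first, then every scenario for one user type, in a fixed order."""
    executed = []
    selected = (WARM_UP,) + tuple(s for s in SCENARIOS if s.user_type == user_type)
    for scenario in selected:
        for step in scenario.steps:
            send(step)  # replaying the same steps in the same order keeps runs identical
            executed.append(f"{scenario.name}: {step}")
    return executed
```

Because the steps are fixed data, two runs of the same campaign send exactly the same sequence, which makes their results comparable.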

Step 4 – Configuration of the test environment:

- installing probes (measurement agents) in each component,

- taking into account their impact on the system's behavior;
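A probe can be as simple as a timing wrapper around a component's entry point, and its own cost can be estimated by timing calls with and without it. This is a minimal sketch; `probe`, `probe_overhead` and `PROBE_DATA` are hypothetical names, and real agents (APM tools, system collectors) work differently.

```python
import functools
import time
from collections import defaultdict

PROBE_DATA = defaultdict(list)  # component name -> durations in seconds


def probe(component: str):
    """Decorator acting as a minimal measurement agent for one component."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            try:
                return func(*args, **kwargs)
            finally:
                PROBE_DATA[component].append(time.perf_counter() - start)
        return wrapper
    return decorator


def probe_overhead(calls: int = 100_000) -> float:
    """Estimate the extra time per call that the probe adds, in microseconds."""
    def bare():
        return None

    probed = probe("overhead-check")(bare)
    start = time.perf_counter()
    for _ in range(calls):
        bare()
    bare_s = time.perf_counter() - start
    start = time.perf_counter()
    for _ in range(calls):
        probed()
    probed_s = time.perf_counter() - start
    PROBE_DATA.pop("overhead-check", None)  # do not mix calibration data with real measurements
    return (probed_s - bare_s) / calls * 1e6
```

The estimate is noisy and only gives an order of magnitude, but it shows whether a probe's influence on the system is negligible compared with the response times being measured.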

Step 5 – Execution of tests:

- with a load injector running the scenarios,

- collection of metrics;

Step 6 – Analysis of the results and re-execution of the tests:

- identifying the patterns (scenarios) that prevent the system from running properly, and the component concerned,

- drafting a diagnosis.

Choice of metrics (key indicators)

Do not track too many metrics at once, or you risk monitoring none of them properly and losing sight of the purpose of the test campaign.

There are two types of metrics: business metrics and technical metrics.

Business metrics:

- the number of transactions,

- the number of pages requested,

- the response time of a feature or a page (registration, payment),

- the most used feature,

- the number of simultaneous users,

- the number of operations per unit of time, etc.
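Several of these business metrics can be derived from a request log. In this sketch, the `Request` record and `business_metrics` are hypothetical, and "simultaneous users" is approximated as the largest number of distinct users seen within the same one-second window, which is a simplification of real session tracking.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Request:
    timestamp_s: float  # seconds since the start of the test
    feature: str        # e.g. "registration", "payment"
    user_id: str
    response_ms: float


def business_metrics(log: list[Request]) -> dict:
    """Compute a few business metrics from a non-empty request log."""
    duration_s = max(r.timestamp_s for r in log) - min(r.timestamp_s for r in log)
    features = Counter(r.feature for r in log)
    users_per_second: dict[int, set] = {}
    for r in log:
        users_per_second.setdefault(int(r.timestamp_s), set()).add(r.user_id)
    avg_by_feature = {
        f: sum(r.response_ms for r in log if r.feature == f) / n for f, n in features.items()
    }
    return {
        "transactions": len(log),
        "ops_per_second": len(log) / duration_s if duration_s > 0 else float(len(log)),
        "most_used_feature": features.most_common(1)[0][0],
        "peak_simultaneous_users": max(len(u) for u in users_per_second.values()),
        "avg_response_ms_by_feature": avg_by_feature,
    }
```

For a log of four requests over two seconds, two of them payments, the sketch reports 2 operations per second and `payment` as the most used feature.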

Technical metrics:

- the CPU load: the load on the central processing unit, i.e. processor usage (occupancy in % and/or load time),

- the system load average,

- network activity (bandwidth consumed),

- disk activity and usage,

- memory usage (RAM usage),

- data transferred during the test (throughput),

- database activity,

- Varnish cache hits and misses (behavior of the HTTP cache server), etc.
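Two of these technical metrics are easy to compute once the raw numbers are available. The sketch below assumes the hit and miss counts have already been read, for example from the `MAIN.cache_hit` and `MAIN.cache_miss` counters reported by `varnishstat`, and reads the load average with Python's `os.getloadavg`, which exists only on Unix-like systems. The function names are hypothetical.

```python
import os
from typing import Optional


def cache_hit_ratio(hits: int, misses: int) -> float:
    """Share of requests served from the cache (0.0 when there was no traffic)."""
    total = hits + misses
    return hits / total if total else 0.0


def load_average() -> Optional[tuple[float, float, float]]:
    """1-, 5- and 15-minute system load averages, or None where unavailable (e.g. Windows)."""
    if not hasattr(os, "getloadavg"):
        return None
    try:
        return os.getloadavg()
    except OSError:  # raised when the load average cannot be obtained
        return None
```

A falling hit ratio during a test often explains rising response times, since more requests reach the back end instead of being served by the HTTP cache.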